Abstract

A panorama has a full field of view (FoV) of 360°×180°, offering a more complete visual description than a perspective image. Thanks to this characteristic, panoramic depth estimation is gaining increasing traction in 3D vision.

However, due to the scarcity of panoramic data, previous methods are often restricted to in-domain settings, leading to poor zero-shot generalization. Furthermore, due to the spherical distortions inherent in panoramas, many approaches rely on perspective splitting (e.g., cubemaps), which leads to suboptimal efficiency.

To address these challenges, we propose DA2: Depth Anything in Any Direction, an accurate, zero-shot generalizable, and fully end-to-end panoramic depth estimator. Specifically, to scale up panoramic data, we introduce a data curation engine that generates high-quality panoramic depth data from perspective data, creating ~543K panoramic RGB-depth pairs and bringing the total to ~607K. To further mitigate spherical distortions, we present SphereViT, which explicitly leverages spherical coordinates to enforce spherical geometric consistency in panoramic image features, yielding improved performance.

A comprehensive benchmark on multiple datasets demonstrates DA2's state-of-the-art (SoTA) performance, with an average 38% improvement in AbsRel over the strongest zero-shot baseline. Surprisingly, DA2 even outperforms prior in-domain methods, highlighting its strong zero-shot generalization. Moreover, as an end-to-end solution, DA2 is much more efficient than fusion-based approaches and enables a wide range of applications.

Methodology

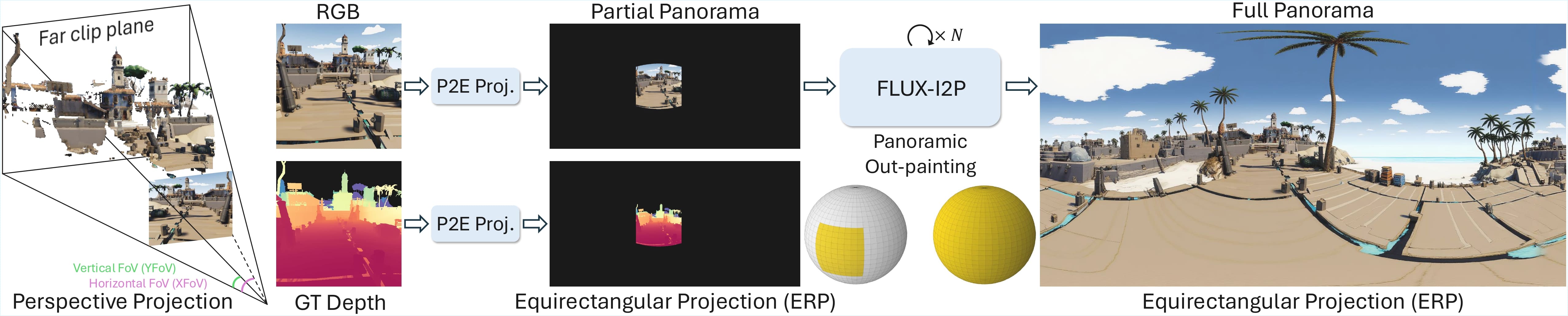

Panoramic data curation engine.

Panoramic data curation engine. This module converts high-quality perspective RGB-depth pairs into full panoramas via Perspective-to-Equirectangular (P2E) projection and panoramic out-painting with FLUX-I2P. It creates ~543K panoramic samples from perspective data, scaling the total from ~63K to ~607K (~10×) and forming a solid training-data foundation for DA2. The highlighted areas on the spheres indicate the FoV coverage.
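The P2E step can be illustrated with a minimal NumPy sketch; it is not the engine's actual code. It assumes a pinhole camera with square pixels, a known horizontal FoV, the principal point at the image centre, the camera sitting at the sphere centre looking along +z, and nearest-neighbour sampling. `perspective_to_equirect` is a hypothetical name, and the FLUX-I2P out-painting that completes the uncovered region is not shown. Converting perspective z-depth into distance along each ray is also an assumption, chosen to match the distance supervision used for training.

```python
import numpy as np


def perspective_to_equirect(rgb, depth, hfov_deg, pano_h=512):
    """Hypothetical P2E sketch: paste a pinhole RGB-D image onto an equirectangular canvas."""
    h, w = depth.shape
    pano_w = 2 * pano_h
    fx = (w / 2) / np.tan(np.radians(hfov_deg) / 2)  # focal length in pixels
    fy = fx                                          # assumption: square pixels
    cx, cy = w / 2, h / 2                            # assumption: centred principal point

    # Longitude/latitude at each equirectangular pixel centre.
    j, i = np.meshgrid(np.arange(pano_w), np.arange(pano_h))
    lon = (j + 0.5) / pano_w * 2 * np.pi - np.pi     # [-pi, pi)
    lat = np.pi / 2 - (i + 0.5) / pano_h * np.pi     # (pi/2 ... -pi/2)

    # Unit ray directions in camera axes (x right, y down, z forward).
    dx = np.cos(lat) * np.sin(lon)
    dy = -np.sin(lat)
    dz = np.cos(lat) * np.cos(lon)

    # Pinhole projection, only for rays in front of the camera.
    front = dz > 1e-6
    safe_dz = np.where(front, dz, 1.0)
    u = fx * dx / safe_dz + cx
    v = fy * dy / safe_dz + cy
    valid = front & (u >= 0) & (u < w) & (v >= 0) & (v < h)  # FoV coverage mask

    col = np.clip(u.astype(int), 0, w - 1)           # nearest-neighbour sampling
    row = np.clip(v.astype(int), 0, h - 1)

    pano_rgb = np.zeros((pano_h, pano_w, 3), dtype=rgb.dtype)
    pano_dist = np.zeros((pano_h, pano_w), dtype=np.float64)
    pano_rgb[valid] = rgb[row[valid], col[valid]]
    # Perspective depth is z-depth; divide by the ray's z-component to get ray distance.
    pano_dist[valid] = depth[row[valid], col[valid]] / dz[valid]
    return pano_rgb, pano_dist, valid


# Usage: a flat grey 4:3 image at constant z-depth 2.0 with a 90° horizontal FoV.
rgb = np.full((240, 320, 3), 128, dtype=np.uint8)
depth = np.full((240, 320), 2.0)
pano_rgb, pano_dist, mask = perspective_to_equirect(rgb, depth, hfov_deg=90)
```

The returned mask plays the role of the highlighted coverage region: everything outside it is what out-painting has to fill. Distances grow toward the image borders because off-axis rays travel farther to reach the same z-depth.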

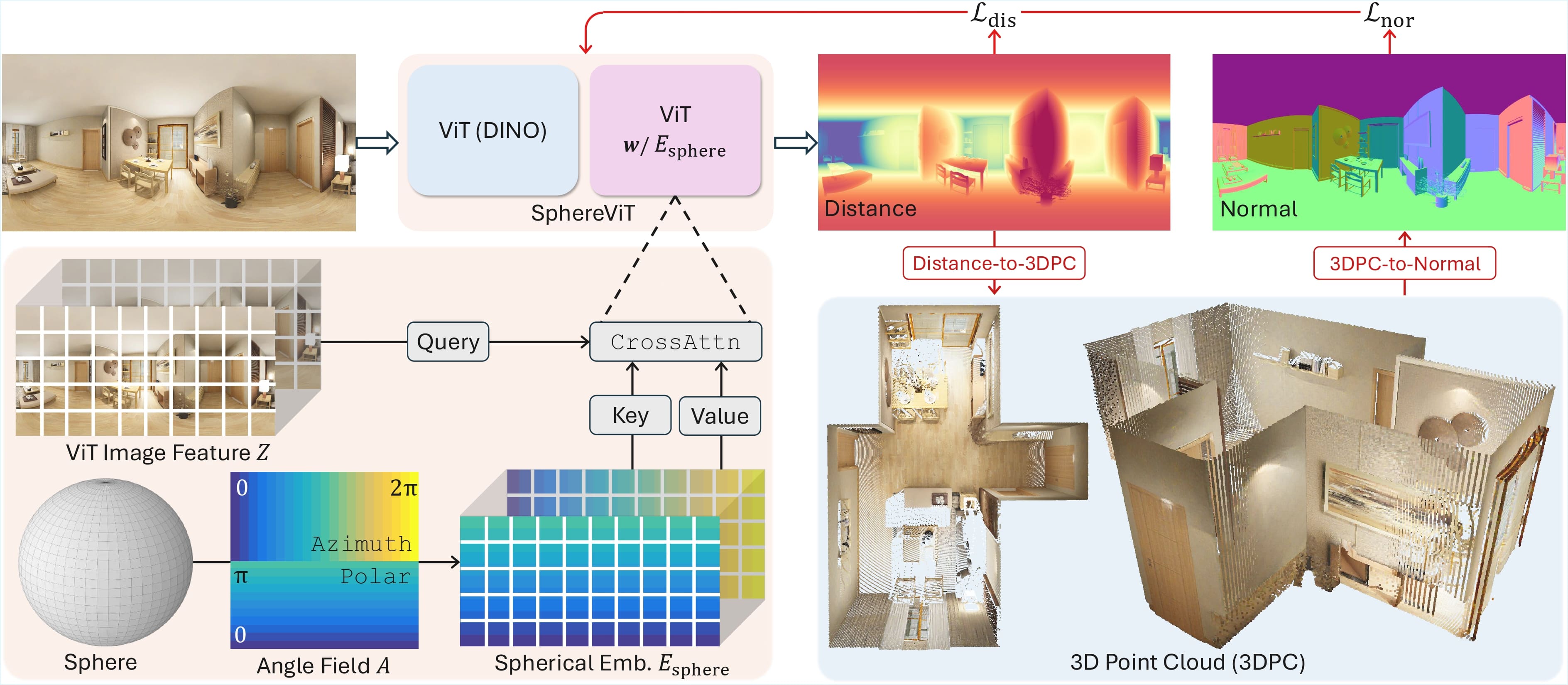

Sphere-aware ViT and Its Training Losses.

SphereViT and its training losses. By leveraging the spherical embedding $E_\text{sphere}$, which is explicitly derived from the spherical coordinates of panoramas, SphereViT produces distortion-aware image features, yielding more accurate geometric estimation for panoramas. The training supervision combines a distance loss $\mathcal{L}_\text{dis}$ for globally accurate distance values and a normal loss $\mathcal{L}_\text{nor}$ for locally smooth and sharp surfaces.
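To make the geometry concrete, here is a hedged NumPy sketch of (a) a spherical embedding built from per-pixel longitude and latitude and (b) the two loss terms. The Fourier-feature form of the embedding, the L1 distance loss, and the 1 − cosine normal loss with normals from central differences of the back-projected point map are all assumptions for illustration. How SphereViT actually fuses $E_\text{sphere}$ with image features, and the exact loss definitions and weights, are not reproduced here; all function names are hypothetical.

```python
import numpy as np


def sphere_grid(h, w):
    """Longitude in [-pi, pi) and latitude in (-pi/2, pi/2) at equirectangular pixel centres."""
    j, i = np.meshgrid(np.arange(w), np.arange(h))
    lon = (j + 0.5) / w * 2 * np.pi - np.pi
    lat = np.pi / 2 - (i + 0.5) / h * np.pi
    return lon, lat


def spherical_embedding(h, w, num_freqs=4):
    """Hypothetical E_sphere: sin/cos Fourier features of (lon, lat), shape (h, w, 4 * num_freqs)."""
    lon, lat = sphere_grid(h, w)
    feats = []
    for k in range(num_freqs):
        f = 2.0 ** k
        feats += [np.sin(f * lon), np.cos(f * lon), np.sin(f * lat), np.cos(f * lat)]
    return np.stack(feats, axis=-1)


def distance_to_points(dist):
    """Back-project a distance map: P = d * ray(lon, lat), axes x right, y down, z forward."""
    lon, lat = sphere_grid(*dist.shape)
    rays = np.stack([np.cos(lat) * np.sin(lon), -np.sin(lat), np.cos(lat) * np.cos(lon)], axis=-1)
    return dist[..., None] * rays


def normals_from_distance(dist, eps=1e-12):
    """Unit normals from central differences of the point map (border cropped, no lon wrap)."""
    p = distance_to_points(dist)
    du = p[1:-1, 2:] - p[1:-1, :-2]   # tangent along longitude
    dv = p[2:, 1:-1] - p[:-2, 1:-1]   # tangent along latitude
    n = np.cross(du, dv)
    return n / (np.linalg.norm(n, axis=-1, keepdims=True) + eps)


def distance_loss(pred, gt, mask):
    """Assumed L1 distance loss over valid pixels."""
    return np.abs(pred - gt)[mask].mean()


def normal_loss(pred, gt, mask):
    """Assumed 1 - cosine similarity between predicted and ground-truth normals."""
    cos = (normals_from_distance(pred) * normals_from_distance(gt)).sum(axis=-1)
    return (1.0 - cos)[mask[1:-1, 1:-1]].mean()


# Usage: a scaled copy of a constant-distance sphere has wrong distances but identical normals.
h, w = 16, 32
emb = spherical_embedding(h, w)
gt = np.full((h, w), 3.0)
pred = np.full((h, w), 6.0)
mask = np.ones((h, w), dtype=bool)
l_dis = distance_loss(pred, gt, mask)   # |6 - 3| everywhere
l_nor = normal_loss(pred, gt, mask)     # normals agree, so close to 0
```

The usage case shows why the two terms complement each other: the distance term penalizes a global scale error that the normal term ignores, while the normal term reacts to local surface noise that barely moves the average distance error.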

Experiments

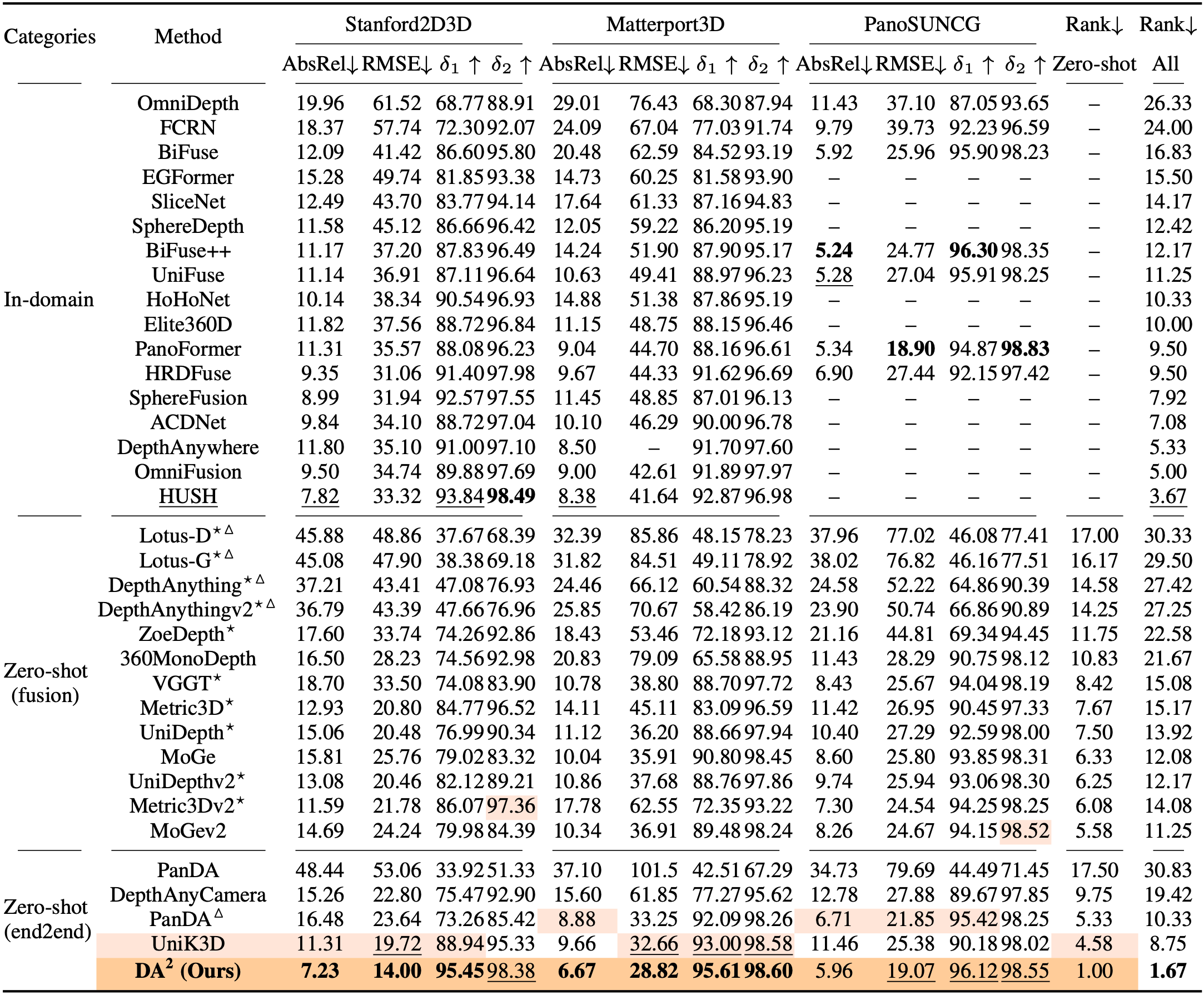

Quantitative comparison. For a fair and comprehensive benchmark, we include zero-shot and in-domain methods as well as panoramic and perspective approaches. Within the zero-shot setting, the best and second-best results are highlighted; across all settings (zero-shot and in-domain), the best and second-best results are in bold and underlined, respectively. DA2 outperforms all other methods both in the zero-shot setting and across all settings, with particularly large gains in the zero-shot setting. Median alignment (scale-invariant) is adopted by default. △: Affine-invariant alignment (scale- and shift-invariant), used for prior relative depth estimators: Depth Anything v1/v2, Lotus, and PanDA. ★: Perspective methods implemented by us following MoGe's panoramic pipeline. All values are in percent (%).
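The two alignment protocols in the caption can be sketched as follows, together with AbsRel, the metric quoted in the abstract. This is a minimal NumPy illustration rather than the paper's evaluation code: the function names are hypothetical, and aligning directly in distance space (rather than, for example, in disparity space for relative-depth baselines) is an assumption.

```python
import numpy as np


def median_align(pred, gt, mask):
    """Scale-invariant alignment: rescale pred so its median matches the ground truth's."""
    scale = np.median(gt[mask]) / np.median(pred[mask])
    return pred * scale


def affine_align(pred, gt, mask):
    """Scale-and-shift alignment via least squares: min over (s, t) of ||s * pred + t - gt||^2."""
    A = np.stack([pred[mask], np.ones(mask.sum())], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, gt[mask], rcond=None)
    return s * pred + t


def abs_rel(pred, gt, mask):
    """Mean absolute relative error over valid pixels, in percent."""
    return 100.0 * np.mean(np.abs(pred[mask] - gt[mask]) / gt[mask])


# Usage: a pure-scale error vs. a scale-and-shift error on synthetic distances.
rng = np.random.default_rng(0)
gt = rng.uniform(1.0, 10.0, size=(64, 128))
mask = gt > 0
pred_scaled = 0.5 * gt
pred_affine = 0.5 * gt + 3.0
err_median_on_scaled = abs_rel(median_align(pred_scaled, gt, mask), gt, mask)
err_affine_on_affine = abs_rel(affine_align(pred_affine, gt, mask), gt, mask)
err_median_on_affine = abs_rel(median_align(pred_affine, gt, mask), gt, mask)
```

Median alignment fully removes a global scale error but not a shift, which is why affine alignment (△) is the more lenient protocol applied to relative depth estimators whose outputs are only defined up to scale and shift.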

Please refer to our paper linked above for more technical details :-)

BibTeX

Please consider citing our paper if you find it useful in your research :-)

Please note that there is a space (" ") between "DA" and "$^{2}$" in the BibTeX entry 🥹

@article{li2025depth,
  title={DA $^{2}$: Depth Anything in Any Direction},
  author={Li, Haodong and Zheng, Wangguangdong and He, Jing and Liu, Yuhao and Lin, Xin and Yang, Xin and Chen, Ying-Cong and Guo, Chunchao},
  journal={arXiv preprint arXiv:2509.26618},
  year={2025}
}